Strange error uploading artifacts in an Azure DevOps pipeline

I have a pipeline that had worked perfectly for years, but yesterday a build failed while uploading artifacts. I queued it again and it failed again, so it did not seem to be an intermittent error (such as an unreliable network). I was really puzzled: since the last good build we had changed only four C# files, nothing that could justify the failure, and we had no network problems that could explain a failed artifact upload to Azure DevOps.

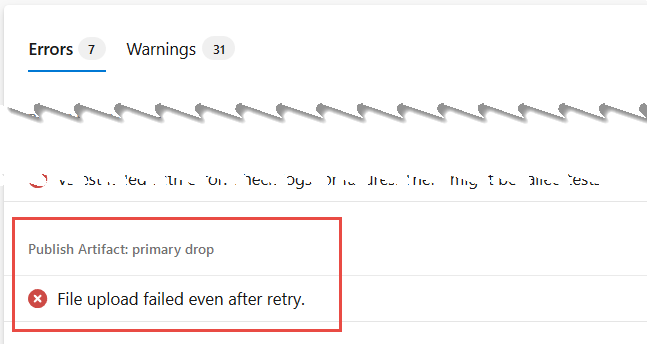

Well, the error was not telling me anything either. It simply stated that the file upload failed, and since it also reported that the upload had already been retried, this was definitely not an intermittent network error.

Figure 1: File upload error; nothing hints at the real cause

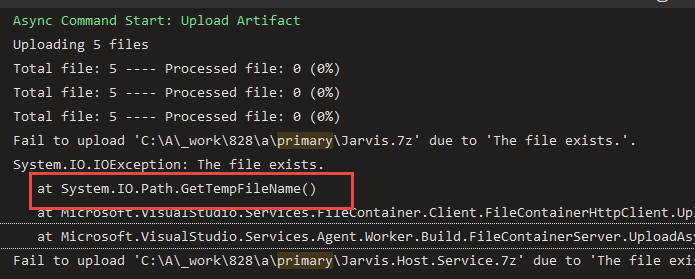

Digging deeper into the execution logs, I found something really strange: an error right after a call to GetTempFileName().

Figure 2: The real error reported in the detailed log

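OK, this is definitely weird: a task is apparently calling the GetTempFileName() method, and the call fails with the strange error “The file already exists”. Searching the internet, I found that this error can be caused by a temp directory containing too many files (some claim the limit is 65535). The Win32 documentation for GetTempFileName supports this: only the lower 16 bits of the unique number are used, which limits the function to 65,535 unique file names for a given directory and prefix.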
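To see why a crowded temp folder produces “file exists” instead of “disk full”, here is a simplified Python model of the naming scheme, based on the Win32 documentation: names look like `<prefix><hex>.TMP`, only the first three prefix characters are used, and the hex part is a 16-bit number. The function name `simulated_temp_name` is hypothetical, and the real API starts from a time-based value, creates the file on disk and may format the hex part differently; this sketch only scans the 16-bit space against a set of names that are already taken.

```python
from __future__ import annotations


def simulated_temp_name(existing: set[str], prefix: str = "tmp") -> str:
    """Simplified (hypothetical) model of Win32 GetTempFileName with uUnique = 0.

    Scans the 16-bit unique-number space and returns the first free name.
    """
    for unique in range(1, 0x10000):  # at most 65,535 candidates
        # Only the first three characters of the prefix end up in the name.
        name = f"{prefix[:3]}{unique:X}.TMP"
        if name not in existing:
            return name
    # Every 16-bit slot is taken: no unique name can be generated.
    raise FileExistsError("The file exists.")


if __name__ == "__main__":
    print(simulated_temp_name({"tmp1.TMP", "tmp2.TMP"}))  # tmp3.TMP
    full = {f"tmp{u:X}.TMP" for u in range(1, 0x10000)}
    try:
        simulated_temp_name(full)
    except FileExistsError as err:
        print(f"{len(full)} names taken -> {err}")  # 65535 names taken -> The file exists.
```

The point of the model is that the failure depends on how many candidate names are already used, not on free disk space, which matches a server with plenty of space but a huge temp folder.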

Temp directories tend to grow really big, and if you have plenty of space on your hard disk they are usually never cleaned up, but you can run into problems when the number of files becomes too high.

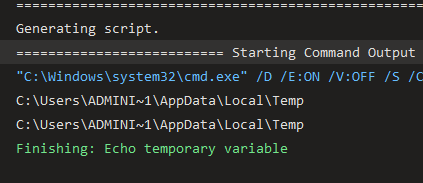

The tricky part is knowing which temp directory the agent that runs the build actually uses, so I added an Execute Command task that simply echoes the %TEMP% variable.

Figure 3: Dumping the TEMP variable inside a build
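Once you know the folder, a quick check on the build server tells you whether you are near the limit. The following is a minimal sketch, assuming Python is available on the server; `count_temp_files` and `SUSPICIOUS_FILE_COUNT` are hypothetical names, and the threshold is just the 65535 figure quoted above. Note that `tempfile.gettempdir()` looks at TMPDIR, TEMP and TMP in that order, which is not necessarily what the Win32 API picks (it checks TMP first), so passing the path echoed by the build is the safer option.

```python
from __future__ import annotations

import os
import sys
import tempfile

# Rough threshold taken from the 65535 limit discussed above (an assumption, not an exact rule).
SUSPICIOUS_FILE_COUNT = 65535


def count_temp_files(path: str | None = None) -> int:
    """Count regular files directly inside the temp folder (not recursive)."""
    path = path or tempfile.gettempdir()
    with os.scandir(path) as entries:
        return sum(1 for e in entries if e.is_file(follow_symlinks=False))


if __name__ == "__main__":
    # Pass the folder echoed by the build (%TEMP%) as the first argument.
    target = sys.argv[1] if len(sys.argv) > 1 else tempfile.gettempdir()
    count = count_temp_files(target)
    print(f"{target}: {count} files")
    if count >= SUSPICIOUS_FILE_COUNT:
        print("Warning: GetTempFileName() may start failing in this folder")
```

The total file count is only a proxy: the API runs out of names for a specific prefix, but a folder with tens of thousands of files is worth cleaning either way.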

Checking the build server, I verified that the temp folder contained more than 100k files, taking up several gigabytes. After deleting all those temp files, the build started working again and the team was happy. Yesterday was a strange day: this was not even the weirdest bug I had to solve :P (another post will follow).
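I simply deleted everything, but if you want to keep the problem from coming back, a scheduled cleanup is an option. Here is a sketch, assuming Python on the build server and a job that runs while no build is in progress; `delete_old_temp_files`, the 7-day default and the `dry_run` flag are hypothetical choices of mine, not something provided by the Azure DevOps agent. Deleting only files older than a cutoff reduces the risk of removing something a running process still needs.

```python
from __future__ import annotations

import os
import time


def delete_old_temp_files(path: str, max_age_days: float = 7, dry_run: bool = True) -> list[str]:
    """Delete regular files in `path` that were not modified for `max_age_days` days.

    With dry_run=True nothing is deleted; the function only reports what it
    would remove. Files that cannot be removed are skipped.
    """
    cutoff = time.time() - max_age_days * 24 * 3600
    removed: list[str] = []
    with os.scandir(path) as it:
        entries = list(it)  # snapshot the listing before deleting anything
    for entry in entries:
        try:
            if not entry.is_file(follow_symlinks=False):
                continue  # leave subfolders alone
            if entry.stat(follow_symlinks=False).st_mtime >= cutoff:
                continue  # recent file, keep it
            if not dry_run:
                os.remove(entry.path)
            removed.append(entry.path)
        except OSError:
            continue  # locked by another process, access denied, or already gone
    return removed
```

Run it once with `dry_run=True` to review the list, then with `dry_run=False` to actually delete.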

Gian Maria.